In an era where the pace and volume of verbal communication continue to accelerate, the ability to capture, understand, and act on what is said, without delay or distortion, has become increasingly critical. Every day, individuals participate in meetings, presentations, and conversations filled with decisions, questions, and knowledge. Yet much of this value is lost to poor recall, fragmented tools, or a lack of contextual awareness during the moment of speech.

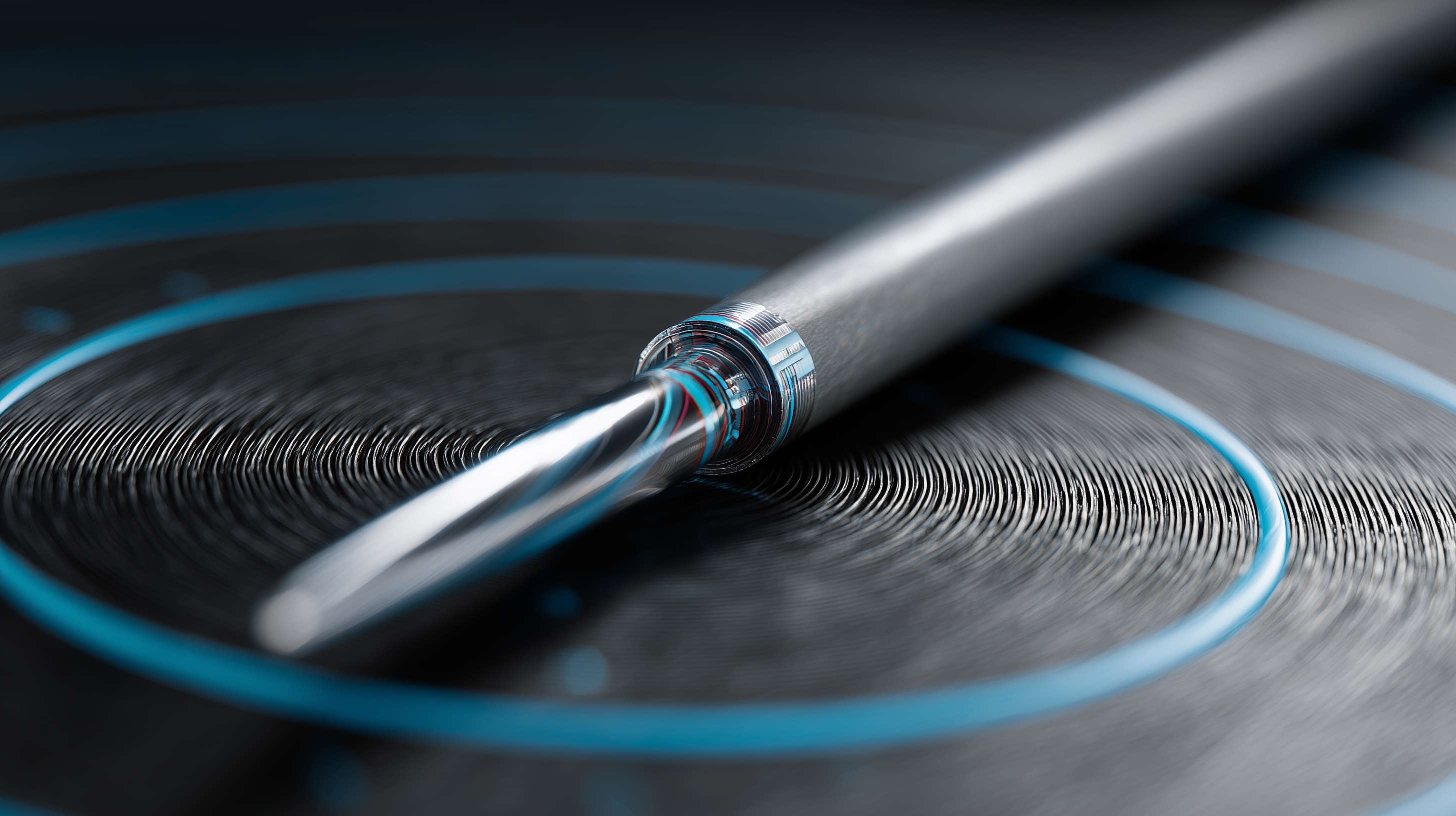

The study presented here explores the design and implementation of a system capable of enhancing human cognition during live verbal interactions. Rather than focusing solely on transcription, the platform introduces mechanisms for real-time diarization, topic extraction, and dynamic content suggestion. By aligning speech recognition with the user's cognitive and contextual needs, the system aims to reduce the information overload typically associated with high-volume, high-stakes communication.

The work culminates in a distributed architecture that unifies real-time capture, intelligent processing, and interactive augmentation – offering a new paradigm for how we engage with spoken content across both physical and virtual settings.

The domain of automatic speech recognition (ASR) has matured rapidly, driven by advancements in deep learning and the proliferation of large-scale language models. Commercial platforms like Otter.ai, Rev, and Notta provide users with the ability to transcribe audio from uploaded files or virtual meeting platforms. While these tools offer reasonable accuracy under ideal conditions, they consistently fall short in areas critical to real-time understanding, such as speaker differentiation, structured editing, and contextual content delivery.

A comparative analysis conducted during the study reveals a consistent gap: existing applications rarely support live diarization or dynamic, editable transcripts. Furthermore, none offer personalized, real-time suggestions related to the ongoing conversation.

The technical divide becomes starker when live speech is involved. Transcription from uploaded files benefits from full-context access and post-processing time. Real-time transcription, by contrast, must operate on fragmented inputs with minimal delay, often in noisy environments with overlapping speakers. The complexity increases further when users require the ability to edit transcriptions as they appear, extract actionable insights, or navigate multi-speaker sessions in diverse acoustic conditions.

While some models, such as RNN-Transducers and Conformers, have shown promise in low-latency scenarios, their application in dynamic, live settings remains limited. Diarization remains an open challenge in ASR pipelines, with most systems relying on post-event segmentation rather than real-time identification of speaker turns. Existing diarization models are not robust to natural overlaps or variations in speech patterns, especially outside English-language contexts.

Meanwhile, research in human-in-the-loop editing of transcriptions is nascent. Early studies show that users can significantly improve accuracy through real-time correction, but most interfaces are not designed for collaborative or live transcription refinement. Finally, few systems integrate large language models into the live speech processing pipeline, missing an opportunity to surface context-aware insights during the conversation itself.

The study adopts a system architecture optimized for modularity, scalability, and responsiveness. The foundation is a stateless, horizontally scalable web server that orchestrates communication across three primary components: a desktop application for audio capture, a web frontend for interaction and visualization, and a backend layer responsible for data storage, post-processing, and integration with external AI services.

At the desktop layer, a custom framework captures audio from multiple sources, including the microphone and system output, ensuring full coverage of the user’s speech and incoming audio during meetings. This is particularly relevant for remote collaboration tools that restrict third-party integrations. The captured streams are combined and transmitted as a single, real-time audio stream using linear PCM at 16 kHz, a format chosen for its compatibility with commercial ASR APIs.
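As a rough illustration of the stream format described above, the sketch below converts floating-point samples into linear PCM and slices the result into fixed-duration chunks for streaming. The 16-bit sample depth, mono layout, 100 ms chunk size, and all function names are assumptions made for the example; the study specifies only linear PCM at 16 kHz.

```python
import struct
from typing import Iterator, Sequence

SAMPLE_RATE_HZ = 16_000   # sample rate stated in the text
BYTES_PER_SAMPLE = 2      # assumption: 16-bit samples
CHUNK_MS = 100            # assumption: chunk duration used for streaming


def to_linear_pcm16(samples: Sequence[float]) -> bytes:
    """Clamp samples to [-1.0, 1.0] and pack them as little-endian signed 16-bit integers."""
    ints = [int(max(-1.0, min(1.0, s)) * 32767) for s in samples]
    return struct.pack(f"<{len(ints)}h", *ints)


def chunk_stream(pcm: bytes, chunk_ms: int = CHUNK_MS) -> Iterator[bytes]:
    """Yield consecutive chunks of PCM bytes, each covering chunk_ms of mono audio."""
    chunk_bytes = SAMPLE_RATE_HZ * BYTES_PER_SAMPLE * chunk_ms // 1000
    for start in range(0, len(pcm), chunk_bytes):
        yield pcm[start:start + chunk_bytes]
```

Under these assumptions, one second of audio occupies 32,000 bytes and is sent as ten chunks of 3,200 bytes each.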

Azure Cognitive Services provides the baseline ASR functionality. However, this output is treated as provisional. Immediate transcription segments are returned to the user with minimal delay but may contain significant noise. These are later replaced by final segments, which are then passed through a secondary layer powered by a large language model. This LLM performs semantic correction, adds punctuation, restores proper casing, and reevaluates speaker attribution.
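The segment lifecycle described above (provisional, then final, then LLM-refined) can be sketched as a small store that only accepts updates moving a segment forward. The class names, the `segment_id` field, and the stage labels are hypothetical; the study does not describe its data model at this level.

```python
from dataclasses import dataclass
from enum import IntEnum


class Stage(IntEnum):
    PROVISIONAL = 1  # immediate, possibly noisy ASR output
    FINAL = 2        # final segment from the ASR service
    REFINED = 3      # after LLM correction, punctuation, casing, speaker review


@dataclass
class Segment:
    segment_id: str
    text: str
    speaker: str
    stage: Stage


class TranscriptStore:
    """Keeps the most advanced version of each segment."""

    def __init__(self) -> None:
        self._segments: dict[str, Segment] = {}

    def upsert(self, incoming: Segment) -> bool:
        """Store the segment unless a later-stage version already exists."""
        current = self._segments.get(incoming.segment_id)
        if current is not None and incoming.stage < current.stage:
            return False  # stale update, e.g. a late provisional result
        self._segments[incoming.segment_id] = incoming
        return True

    def get(self, segment_id: str) -> Segment:
        return self._segments[segment_id]
```

Rejecting stale updates keeps a late-arriving provisional result from overwriting text that has already been finalized or refined.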

Real-time diarization is implemented through an end-to-end neural model that combines frame-wise and block-based approaches. The system uses attractor mechanisms to maintain speaker identity across turns, even in cases of speech overlap or interruptions. Models are optimized to balance latency and accuracy, with dynamic adjustments based on conversation density and number of participants.
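To make the attractor idea concrete, the following sketch shows only the final decision step of an attractor-based diarizer: each speaker is represented by one attractor vector, and a speaker is marked active in a frame when the sigmoid of the dot product between the frame embedding and that attractor exceeds a threshold. The study does not name its model, so the function names, the 0.5 threshold, and the toy vectors are assumptions; a real system would produce the embeddings and attractors with trained networks.

```python
import math
from typing import Sequence


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def active_speakers(
    frame_embeddings: Sequence[Sequence[float]],
    attractors: Sequence[Sequence[float]],
    threshold: float = 0.5,  # assumption: decision threshold
) -> list[list[int]]:
    """Return, for each frame, the indices of speakers judged active.

    A frame can map to several speakers (overlap) or to none (silence).
    """
    decisions = []
    for embedding in frame_embeddings:
        active = []
        for speaker, attractor in enumerate(attractors):
            score = sum(e * a for e, a in zip(embedding, attractor))
            if sigmoid(score) > threshold:
                active.append(speaker)
        decisions.append(active)
    return decisions
```

Because each speaker is scored independently, overlapping speech falls out naturally as a frame with more than one active index.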

The frontend supports real-time transcript manipulation. Users can edit, annotate, or censor text as it appears, with changes reflected immediately across all participants via a WebSocket-driven communication layer. Text segments can be tagged with metadata, including keywords or context notes, which the system tracks for downstream analysis.
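The broadcast behaviour described above can be approximated with an in-memory hub, where each connected session is modelled as an `asyncio.Queue` instead of a real WebSocket connection. The `EditHub` class, the message fields, and the choice not to echo an edit back to its sender are all assumptions for illustration.

```python
import asyncio
import json


class EditHub:
    """Fans out transcript edits to every connected session except the sender."""

    def __init__(self) -> None:
        self._sessions: dict[str, asyncio.Queue] = {}

    def join(self, session_id: str) -> asyncio.Queue:
        """Register a session and return the queue it should read from."""
        queue: asyncio.Queue = asyncio.Queue()
        self._sessions[session_id] = queue
        return queue

    def leave(self, session_id: str) -> None:
        self._sessions.pop(session_id, None)

    async def publish(self, sender_id: str, edit: dict) -> None:
        """Serialize the edit once and deliver it to all other sessions."""
        message = json.dumps(edit)
        for session_id, queue in self._sessions.items():
            if session_id != sender_id:
                await queue.put(message)


async def demo() -> tuple[int, dict]:
    hub = EditHub()
    alice = hub.join("alice")
    bob = hub.join("bob")
    await hub.publish("alice", {"segment_id": "s1", "action": "annotate", "note": "follow up"})
    return alice.qsize(), json.loads(bob.get_nowait())
```

In a deployed system each queue would be replaced by a send call on the session's WebSocket connection; the fan-out logic stays the same.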

The platform includes a suggestion framework that activates when users select segments of the transcript. These requests are handled by a plugin-based engine powered by an LLM. The engine considers the user’s historical context, preferences, and the selected text to fetch relevant external content – such as profiles, definitions, or related articles – from integrated sources. This mechanism transforms the system from a passive recorder into an active cognitive assistant.

Study Details

The work is driven by the hypothesis that transcription alone does not suffice in environments where comprehension, retention, and immediate decision-making are critical. Instead, a system must contextualize spoken information, adapt to speaker behavior in real time, and surface relevant content as the conversation unfolds. The study sets out to build a distributed, AI-powered platform that augments verbal communication by solving a series of interdependent technical challenges:

- Capture high-fidelity audio from multiple sources without latency bottlenecks.

- Transcribe speech in real time, in multiple languages.

- Identify speakers dynamically during the session (diarization).

- Enable live editing of transcripts without disrupting the stream.

- Extract topics and summarize discussions as they happen.

- Deliver contextual suggestions based on selected text and user prompts.

Key metrics include transcription latency, diarization accuracy, system responsiveness under concurrent edits, and relevance of suggested content.

The architecture is centered on a stateless web server that orchestrates data flow across desktop, backend, and web layers. WebSocket communication ensures low-latency synchronization between components, allowing bidirectional, event-driven updates between the user interface and backend processing services.

To guarantee consistent input quality, a custom audio capture framework is developed. It abstracts audio devices into reusable components (AudioDeviceCapture, AudioBufferMixer) that can be combined to route audio from both microphone and system output. The resulting audio stream is encoded in PCM and sent to the transcription service.
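One way such components might compose is sketched below. The component names come from the text, but their interfaces are not described in the study, so the `frames()` method, the constructor arguments, and the sum-and-clamp mixing rule are assumptions. The capture class here replays pre-recorded frames instead of opening real hardware.

```python
from typing import Iterator, Protocol

INT16_MIN, INT16_MAX = -32768, 32767


class AudioSource(Protocol):
    def frames(self) -> Iterator[list[int]]: ...


class AudioDeviceCapture:
    """Stand-in for a device capture: replays frames supplied at construction."""

    def __init__(self, name: str, recorded_frames: list[list[int]]) -> None:
        self.name = name
        self._frames = recorded_frames

    def frames(self) -> Iterator[list[int]]:
        yield from self._frames


class AudioBufferMixer:
    """Combines several sources into one stream by summing aligned samples."""

    def __init__(self, *sources: AudioSource) -> None:
        self._sources = sources

    def frames(self) -> Iterator[list[int]]:
        # zip stops at the shortest source, so mixing ends when any source ends
        for group in zip(*(source.frames() for source in self._sources)):
            yield [
                max(INT16_MIN, min(INT16_MAX, sum(samples)))  # clamp to 16-bit range
                for samples in zip(*group)
            ]
```

Because the mixer exposes the same `frames()` method as a capture device, mixers can themselves be used as sources, which is what makes the components reusable.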

Azure Cognitive Services handles speech recognition. Immediate transcription segments are returned as-is and labeled as provisional. These are pushed to the frontend interface, where users can begin to interact with them. As final versions arrive, they replace provisional ones.

The diarization component is optimized for low latency and capable of resolving overlaps, speaker turns, and ambiguous transitions. In scenarios where diarization fails or degrades, such as simultaneous speech or similar voice signatures, the LLM-enhanced post-processing step re-evaluates speaker assignments using semantic cues.

The transcript editor supports user actions such as censorship, annotation, and correction. These edits are applied to a mutable layer of the transcript while the original remains preserved. This dual-layer model ensures traceability and allows rollback or auditing of user actions. All edits trigger real-time updates across other user sessions through stateful WebSocket broadcasts.

When a user highlights text and requests additional information, the backend forwards the request to a large language model along with relevant user metadata and the selected transcript. The LLM chooses the appropriate plugin, such as a web search, internal document fetch, or public knowledge base query, and returns filtered results.
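The dispatch flow above might look like the following sketch. The plugin choice is delegated to an injected `choose_plugin` callable, which stands in for the LLM call; the class name, the callable signatures, and the use of a simple result limit as the "filter" are assumptions, not the study's implementation.

```python
from typing import Callable

Plugin = Callable[[str], list[str]]
# Stand-in for the LLM: receives the selected text, user metadata, and the
# available plugin names, and returns the name of the plugin to run.
Chooser = Callable[[str, dict, list[str]], str]


class SuggestionEngine:
    def __init__(self, choose_plugin: Chooser) -> None:
        self._plugins: dict[str, Plugin] = {}
        self._choose = choose_plugin

    def register(self, name: str, plugin: Plugin) -> None:
        self._plugins[name] = plugin

    def suggest(self, selected_text: str, user_metadata: dict, limit: int = 3) -> list[str]:
        """Ask the chooser for a plugin, run it, and return at most `limit` results."""
        name = self._choose(selected_text, user_metadata, sorted(self._plugins))
        if name not in self._plugins:
            raise KeyError(f"chooser returned unknown plugin: {name!r}")
        return self._plugins[name](selected_text)[:limit]
```

Validating the chooser's answer against the registry matters when the chooser is a language model, since it can return a name that was never registered.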

Evaluation and Results

We conduct validation on both Portuguese and English data. For European Portuguese, the base transcription from Azure shows critical weaknesses: lack of punctuation, broken sentence boundaries, and incorrect speaker labeling. After LLM post-processing, transcripts are structurally correct, semantically coherent, and assigned to appropriate speakers.

On average, the time between receiving a final transcription and delivering a processed version is 51.1 seconds for Portuguese and 58.4 seconds for English. These intervals balance real-time usability with processing depth. Notably, the processing window allows for segment-level refinement, enabling a continuous feedback loop rather than batch revision.
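A delay figure of this kind can be computed from paired timestamps, as in the sketch below. The function name and the event format are assumptions; the study does not describe its measurement tooling.

```python
from statistics import mean


def mean_processing_delay(events: list[tuple[float, float]]) -> float:
    """Average delay in seconds between a final segment and its processed version.

    Each event is (final_received_at, processed_delivered_at), both in seconds.
    """
    delays = [processed - final for final, processed in events]
    if any(delay < 0 for delay in delays):
        raise ValueError("processed timestamp precedes final timestamp")
    return mean(delays)
```

For two hypothetical segments with timestamps `(10.0, 60.0)` and `(20.0, 72.0)`, the delays are 50 and 52 seconds, so the function returns 51.0.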

The diarization model, though challenged by overlapping speakers, benefits significantly from the combination of initial real-time labeling and post hoc semantic correction. In multiple test cases, misattributions are corrected within two steps of the transcription lifecycle.

The suggestion engine performs consistently under varied prompts. Users receive context-aware content relevant to the highlighted segment and their role. Latency for these suggestions averages under one minute, with most responses delivered in 20–40 seconds depending on plugin query time.

The system addresses persistent gaps in knowledge retention, meeting productivity, and decision-making quality. It reduces manual note-taking effort, mitigates the risk of missed information, and empowers participants to request clarification without halting the conversation.

Industries that rely on accurate verbal exchange – such as healthcare, law, consulting, education, and public policy – can benefit from reduced error rates, improved compliance, and higher operational efficiency. The platform also increases accessibility for individuals with hearing impairments or language barriers, offering structured transcripts and contextual explanations on demand.

The system serves as a model for how AI can support – not replace – human understanding. Rather than automating decisions, it creates a structured layer of information that enables users to comprehend more, act faster, and remain fully engaged in the moment. As future iterations reduce latency and increase language coverage, this form of augmentation has the potential to redefine the role of speech in professional and collaborative contexts.